After reading the Concurrency chapter in Learning Go: An Idiomatic Approach to Real-World Go Programming, I was surprised by how different Golang’s approach to concurrency is from that of other programming languages. This inspired me to write a blog post about concurrency in Golang.

Introduction to Concurrency

Concurrency is the computer science concept of dividing a single process into independent parts and defining how those parts can safely share data. Most programming languages provide concurrency through libraries that use operating system threads and rely on locks to protect shared data. Go takes a different approach: concurrency is built into the language and is based on Communicating Sequential Processes (CSP), so goroutines usually share data by passing it over channels rather than by guarding it with locks.

Concurrency allows different parts of a program to run independently, which can improve performance and make better use of system resources. It is especially useful in modern software such as network services and applications that handle multiple user inputs.

Most programs follow a three-step process: they take in data, process it, and produce output. Whether concurrency helps depends on how data moves through these steps. Two steps can run concurrently if neither needs the other’s data; if one step depends on the other’s output, they must run in sequence. Concurrency is most useful when a program combines the results of several tasks that can run independently.
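To make the last point concrete, here is a minimal sketch built around a hypothetical sumHalves function: the two halves of a slice can be summed independently, so each half is summed in its own goroutine, and the final step combines the two partial results. It uses goroutines and channels, both covered later in this post.

```go
package main

import "fmt"

// sumHalves is a hypothetical example: the two halves of nums do not depend
// on each other, so each half is summed in its own goroutine.
func sumHalves(nums []int) int {
	mid := len(nums) / 2
	partial := make(chan int) // carries each half's subtotal back to the caller

	sum := func(part []int) {
		total := 0
		for _, n := range part {
			total += n
		}
		partial <- total
	}

	go sum(nums[:mid])
	go sum(nums[mid:])

	// The combining step depends on both results, so it waits for both.
	return <-partial + <-partial
}

func main() {
	fmt.Println(sumHalves([]int{1, 2, 3, 4, 5, 6, 7, 8, 9, 10})) // 55
}
```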

Concurrency vs. Parallelism

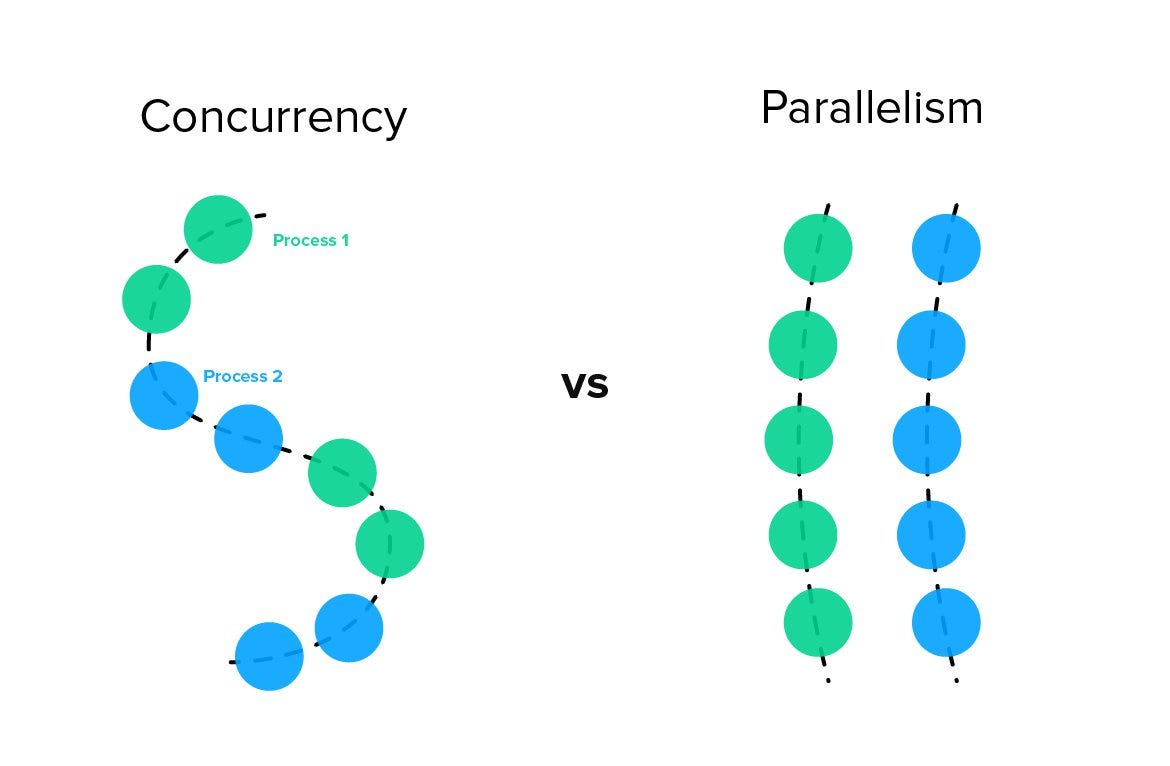

Concurrent and parallel programming are related but distinct concepts. Concurrent programming focuses on the logical structure and behavior of programs, while parallel programming deals with their physical execution and performance optimization. Concurrent programs can run on both single-core and multicore processors, whereas parallel programs require multicore or distributed systems. While concurrent programs can be parallel, not all are.

For instance, a web server handling multiple requests concurrently cannot execute them in parallel if it runs on a single-core processor. Similarly, parallel programs are not always concurrent: a matrix multiplication split across multiple cores may need no coordination or synchronization between its parts, which makes it parallel but not concurrent.

Goroutines

A goroutine can be thought of as a lightweight thread, managed by the Go runtime. When a Go program starts, the runtime creates several threads and runs a single goroutine to execute the program. The Go runtime scheduler automatically assigns all goroutines, including the initial one, to these threads, similar to how the operating system schedules threads across CPU cores. While this might seem redundant since the OS already has a scheduler, it offers several advantages:

Creating a goroutine is faster than creating a thread, because the runtime doesn’t need to create OS-level resources for it.

Goroutines start with much smaller stacks than OS threads, and their stacks grow as needed, which makes them more memory efficient.

Switching between goroutines is quicker than switching between threads, as it occurs within the process, avoiding slower system calls.

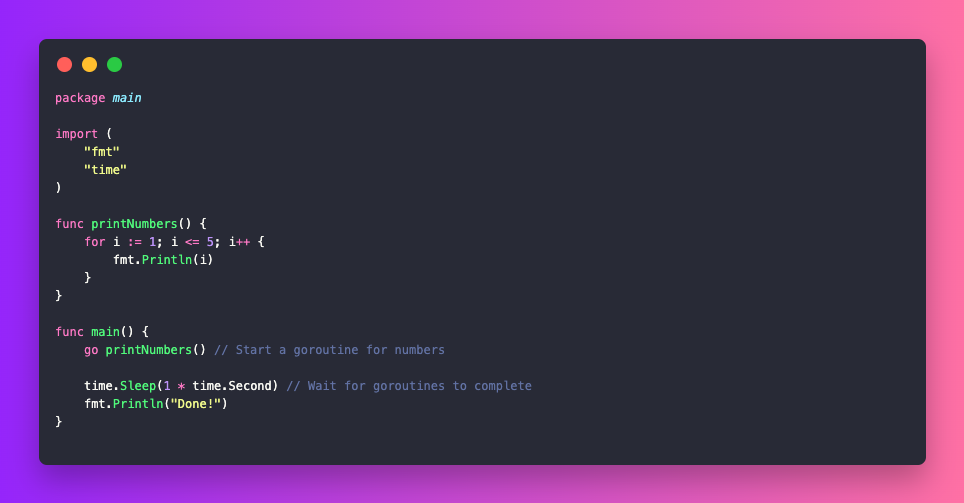

In this example, printNumbers() runs as a goroutine because its call is prefixed with the go keyword, so printNumbers() and the main function run concurrently.

Channels

Goroutines use channels to communicate. Similar to slices and maps, channels are built-in types that are created with the make function:

```go
ch := make(chan int)
```

Channels are reference types, meaning when you pass a channel to a function, you’re passing a pointer to it. Additionally, like slices and maps, an uninitialized channel has a zero value of nil.

The <- operator is used to interact with a channel. To read from a channel, place the <- operator to the left of the channel variable. To write to a channel, place it to the right:

```go
a := <-ch // read a value from ch into a
ch <- b   // write the value of b to ch
```
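Putting the pieces together, the sketch below passes two channels to a hypothetical double function running in its own goroutine. Because both sides refer to the same channels, the value main writes is the one double reads, and vice versa.

```go
package main

import "fmt"

// double is a hypothetical function: it reads one value from in and writes
// twice that value to out. The caller and double share the same channels.
func double(in chan int, out chan int) {
	v := <-in    // read: <- is to the left of the channel
	out <- v * 2 // write: <- is to the right of the channel
}

func main() {
	in := make(chan int)
	out := make(chan int)
	go double(in, out) // run double in its own goroutine

	in <- 21           // waits until double reads the value
	fmt.Println(<-out) // 42
}
```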

Each value sent to a channel can only be read once. If multiple goroutines are reading from the same channel, only one of them will receive the value written to the channel.
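A small way to check this: in the sketch below, a hypothetical distribute function sends distinct values on one channel that several worker goroutines read from, then counts how often each value was received across all workers. Every value shows up exactly once, even though which worker gets it varies. It uses for-range over a channel and close, which are covered in the sections below.

```go
package main

import "fmt"

// distribute is a hypothetical helper: it sends every value on one channel
// that several workers read from, and counts how many times each value was
// received in total.
func distribute(values []int, workers int) map[int]int {
	ch := make(chan int)
	received := make(chan []int, workers) // one report per worker

	for w := 0; w < workers; w++ {
		go func() {
			var got []int
			for v := range ch { // each value is delivered to exactly one worker
				got = append(got, v)
			}
			received <- got
		}()
	}

	for _, v := range values {
		ch <- v
	}
	close(ch) // lets each worker's for-range loop end

	counts := make(map[int]int)
	for w := 0; w < workers; w++ {
		for _, v := range <-received {
			counts[v]++
		}
	}
	return counts
}

func main() {
	// With distinct input values, every count is 1.
	fmt.Println(distribute([]int{1, 2, 3, 4, 5, 6}, 3))
}
```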

By default, channels are unbuffered. When a goroutine writes to an open, unbuffered channel, it must wait until another goroutine reads from that channel. Similarly, when a goroutine attempts to read from an open, unbuffered channel, it will pause until another goroutine writes to the channel. This means at least two goroutines must be running concurrently to perform writes or reads on an unbuffered channel.

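Go also provides buffered channels, which can hold a limited number of values without blocking the writer. You create one by passing the capacity as the second argument to make, as in make(chan int, 10). If the buffer is full, any further write pauses the writing goroutine until another goroutine reads from the channel. Likewise, reading from a buffered channel blocks while the buffer is empty. The built-in len and cap functions report how many values are currently in the buffer and how large the buffer is.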
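A minimal sketch of a buffered channel, using a hypothetical fill helper: the writes succeed with no reader because the buffer has room, and the values come back out in the order they went in.

```go
package main

import "fmt"

// fill is a hypothetical helper that creates a buffered channel and writes
// capacity values into it without any reader.
func fill(capacity int) chan int {
	ch := make(chan int, capacity) // second argument sets the buffer size
	for i := 1; i <= capacity; i++ {
		ch <- i // does not block: the buffer still has room
	}
	// The buffer is now full: one more write would block until someone reads.
	return ch
}

func main() {
	ch := fill(3)
	fmt.Println(len(ch), cap(ch)) // 3 3
	fmt.Println(<-ch, <-ch)       // 1 2
	fmt.Println(len(ch), cap(ch)) // 1 3
}
```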

Using for-range with Channels

In a for-range loop over a channel, only a single variable is declared to represent the value received from the channel. If the channel is open and a value is available, it is assigned to this variable, and the loop body executes. If no value is available, the goroutine waits until one is received or the channel is closed. The loop runs until the channel is closed, or until a break or return statement is encountered.

```go
for v := range ch {
	fmt.Println(v)
}
```
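A complete, runnable version of that loop needs a goroutine that writes the values and then closes the channel, otherwise the loop would wait forever. The sketch below uses a hypothetical produce function for the writer. close is described in the next section.

```go
package main

import "fmt"

// produce is a hypothetical helper: it writes 1..n to a new channel from its
// own goroutine and closes the channel when done, so a for-range loop ends.
func produce(n int) <-chan int {
	ch := make(chan int)
	go func() {
		defer close(ch)
		for i := 1; i <= n; i++ {
			ch <- i
		}
	}()
	return ch
}

func main() {
	sum := 0
	for v := range produce(5) { // ends once the channel is closed and drained
		fmt.Println(v)
		sum += v
	}
	fmt.Println("sum:", sum) // sum: 15
}
```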

Closing a Channel

When you’re finished writing to a channel, you can close it using the built-in close function:

```go
close(ch)
```

After a channel is closed, any attempts to write to it or close it again will cause a panic. Interestingly, reading from a closed channel always works. If the channel is buffered and there are unread values, they will be returned in order. If the channel is unbuffered or all buffered values have been read, the zero value of the channel’s type is returned.
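Because a closed channel returns the zero value, a plain read cannot tell a real 0 apart from "closed". Go’s comma ok idiom solves this: v, ok := <-ch sets ok to false when the channel is closed and has no values left. The sketch below (readN and readResult are hypothetical names) records each read.

```go
package main

import "fmt"

// readResult records what one receive using the comma ok idiom returned.
type readResult struct {
	value int
	ok    bool
}

// readN performs n receives on ch. ok is true when the value came from a
// write and false when the channel is closed and empty.
func readN(ch <-chan int, n int) []readResult {
	results := make([]readResult, 0, n)
	for i := 0; i < n; i++ {
		v, ok := <-ch
		results = append(results, readResult{value: v, ok: ok})
	}
	return results
}

func main() {
	ch := make(chan int, 2)
	ch <- 10
	close(ch)
	fmt.Println(readN(ch, 3)) // [{10 true} {0 false} {0 false}]
}
```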

Channel Behaviors

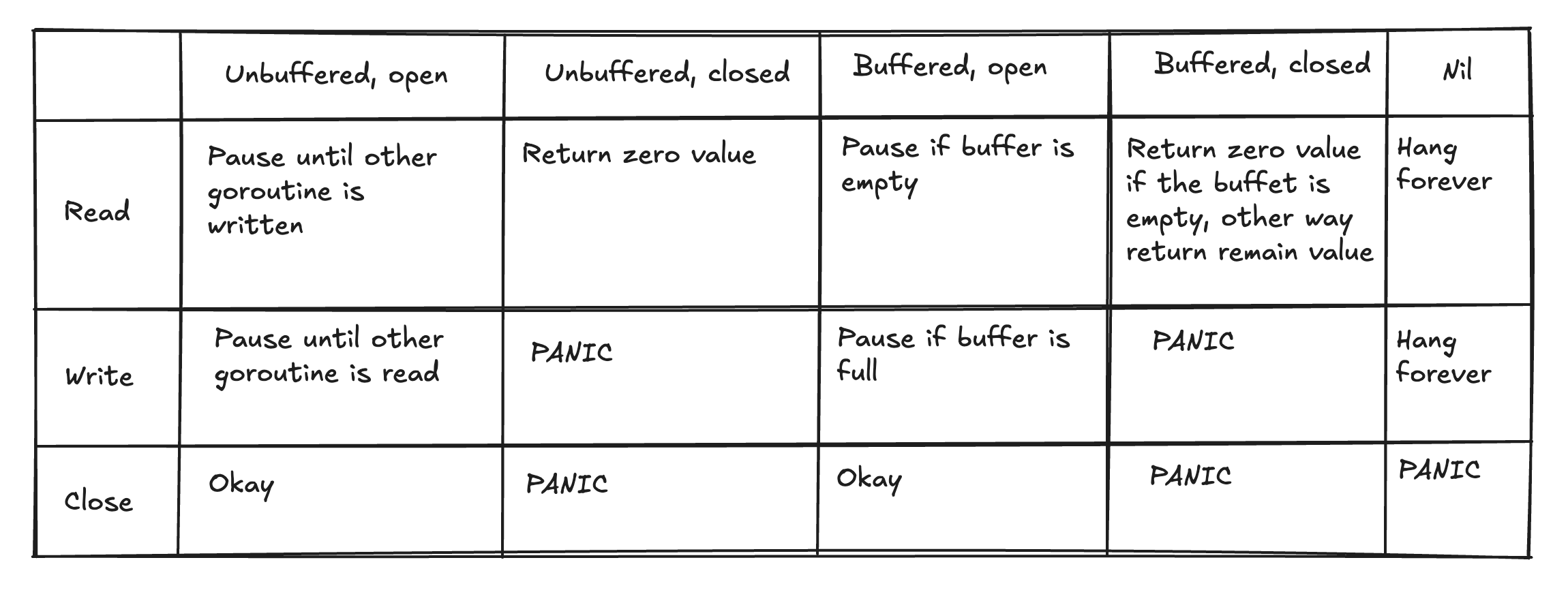

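A channel can be in one of several states: nil, open (buffered or unbuffered), or closed. Each state behaves differently when you read from, write to, or close the channel, and the table above summarizes these behaviors. The cases to watch for are the ones that panic (writing to or closing a closed channel, and closing a nil channel) and the ones that block forever (reading from or writing to a nil channel).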
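The panicking cases can be checked with a small helper. The sketch below uses a hypothetical panics function that calls f and uses recover to report whether it panicked, so the demonstration keeps running after each panic.

```go
package main

import "fmt"

// panics is a hypothetical helper that reports whether calling f panics.
// It recovers the panic so the program can continue.
func panics(f func()) (panicked bool) {
	defer func() {
		if recover() != nil {
			panicked = true
		}
	}()
	f()
	return false
}

func main() {
	closed := make(chan int)
	close(closed)
	var nilCh chan int // zero value: nil

	fmt.Println(panics(func() { close(closed) })) // true: closing a closed channel
	fmt.Println(panics(func() { closed <- 1 }))   // true: writing to a closed channel
	fmt.Println(panics(func() { <-closed }))      // false: reading returns the zero value
	fmt.Println(panics(func() { close(nilCh) }))  // true: closing a nil channel
}
```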

Select

Go’s select statement lets a goroutine wait on several channel operations at once and proceed with whichever one is ready. If more than one case is ready, select picks one at random, so no channel is starved. It looks like a switch statement, but each case is a channel read or write.

In this example, the select statement inside the loop waits until both goroutines have sent their results over their channels.
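A minimal text sketch of that pattern follows. It assumes two hypothetical workers that each send a result on their own channel after an arbitrary delay. On each pass through the loop, select handles whichever result is ready.

```go
package main

import (
	"fmt"
	"time"
)

// worker is a hypothetical task that sends its result after a delay.
func worker(name string, delay time.Duration, out chan<- string) {
	time.Sleep(delay) // stand-in for real work
	out <- name + " done"
}

// collect waits for both workers with select, handling whichever result
// arrives first on each pass through the loop.
func collect() []string {
	a := make(chan string)
	b := make(chan string)
	go worker("A", 50*time.Millisecond, a)
	go worker("B", 10*time.Millisecond, b)

	var results []string
	for i := 0; i < 2; i++ {
		select {
		case msg := <-a:
			results = append(results, msg)
		case msg := <-b:
			results = append(results, msg)
		}
	}
	return results
}

func main() {
	fmt.Println(collect()) // order depends on which worker finishes first
}
```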

WaitGroups

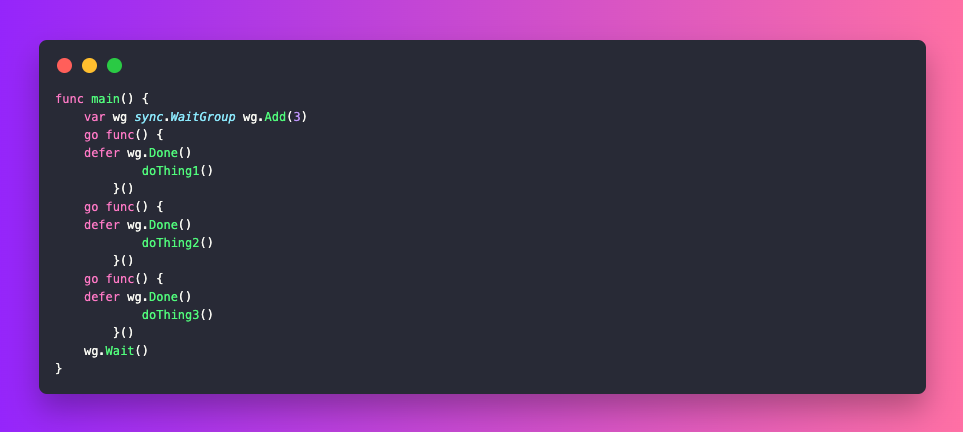

At times, a goroutine needs to wait for several other goroutines to finish their work. To wait for a single goroutine, you can use the context cancellation pattern. When you’re waiting for multiple goroutines, however, use a WaitGroup from the sync package in Go’s standard library.

A sync.WaitGroup doesn’t need explicit initialization: declaring it is enough, because its zero value is ready to use. It has three methods: Add, Done, and Wait. Add increases the counter of goroutines to wait for. Done decreases the counter and should be called when a goroutine finishes. Wait pauses the current goroutine until the counter reaches zero. Typically, Add is called once with the total number of goroutines before they are started, and Done is called inside each goroutine. Placing the Done call in a defer statement ensures it runs even if the goroutine panics. A WaitGroup must not be copied after first use, so goroutines should share it through a closure or a pointer.
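A minimal sketch of these steps, using a hypothetical squareAll function: Add is called once with the number of goroutines, each goroutine defers Done, and Wait blocks until all of them have finished.

```go
package main

import (
	"fmt"
	"sync"
)

// squareAll is a hypothetical example: it squares each number in its own
// goroutine and uses a WaitGroup to wait for all of them.
func squareAll(nums []int) []int {
	results := make([]int, len(nums))

	var wg sync.WaitGroup // zero value is ready to use
	wg.Add(len(nums))     // one count per goroutine, added before any start

	for i, n := range nums {
		go func(i, n int) {
			defer wg.Done()    // decrement the counter even if this goroutine panics
			results[i] = n * n // each goroutine writes its own index, so no lock is needed
		}(i, n)
	}

	wg.Wait() // block until the counter drops to zero
	return results
}

func main() {
	fmt.Println(squareAll([]int{1, 2, 3, 4})) // [1 4 9 16]
}
```

The goroutines reach wg through the closure rather than receiving a copy, so every Done call decrements the same counter.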

Conclusion

This article explored Go’s concurrency tools: goroutines, which are lightweight threads managed by the Go runtime; channels, which let goroutines communicate and synchronize; the select statement, which handles multiple channel operations; and sync.WaitGroup, which waits for a group of goroutines to finish.

That’s it for this article. I hope you found it useful. If you need any help, please let me know in the comments.

Let's connect on Twitter and LinkedIn.

👋 Thanks for reading, see you next time!